Targeting Onsite A/B Test

The A/B Test feature lets you test multiple content variations in a Targeting on-site campaign to identify the best-performing version. By distributing your audience across variants and comparing their engagement, you can improve content effectiveness and conversion rates. The system uses statistical analysis to determine the winning variant automatically, and you can also select a winner manually if needed.

How to Access

- Navigate to Campaign > Targeting > On-Site.

- Click on the New button to create a campaign.

How to Use

1. Campaign Settings

On the Settings screen, configure the basic campaign details such as campaign name, schedule, website, platform (web or mobile web), audience, and trigger time. These are standard setup steps before enabling A/B testing.

2. Enable A/B Testing

- Scroll to the Testing section.

- Toggle A/B Testing ON to test multiple content variants.

- Click Next to move to the Content screen.

Enable A/B Testing

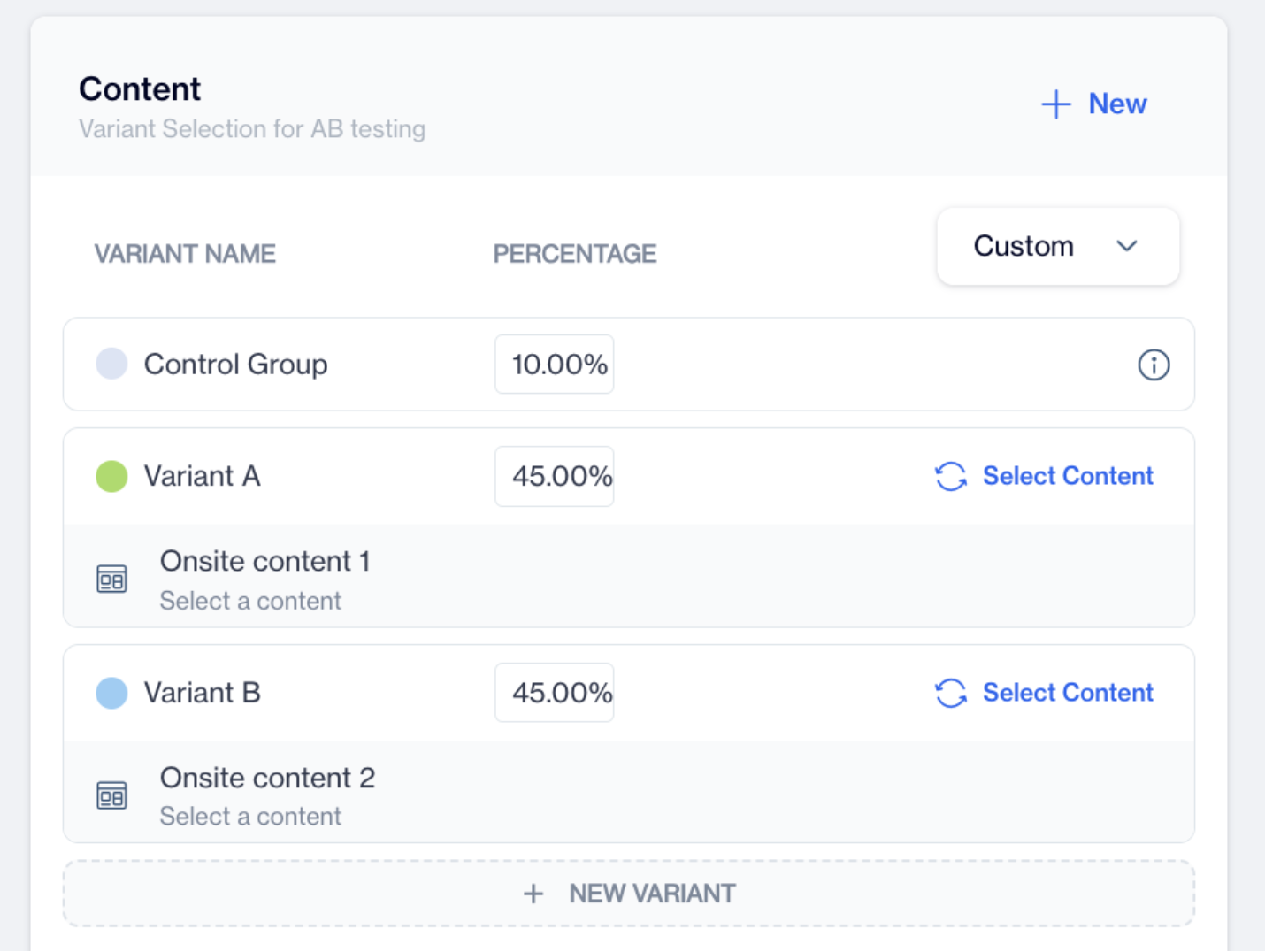

3. Configure Content Variants

- In the Variant Selection section:

  - Predefined variants (e.g., Control Group, Variant A, Variant B) are displayed.

  - Next to each variant, click the "Select Content" button to choose the content for that variant.

Variant Selection

- Traffic Allocation:

  - Select Equal to distribute traffic evenly.

  - Select Custom to define your own percentages:

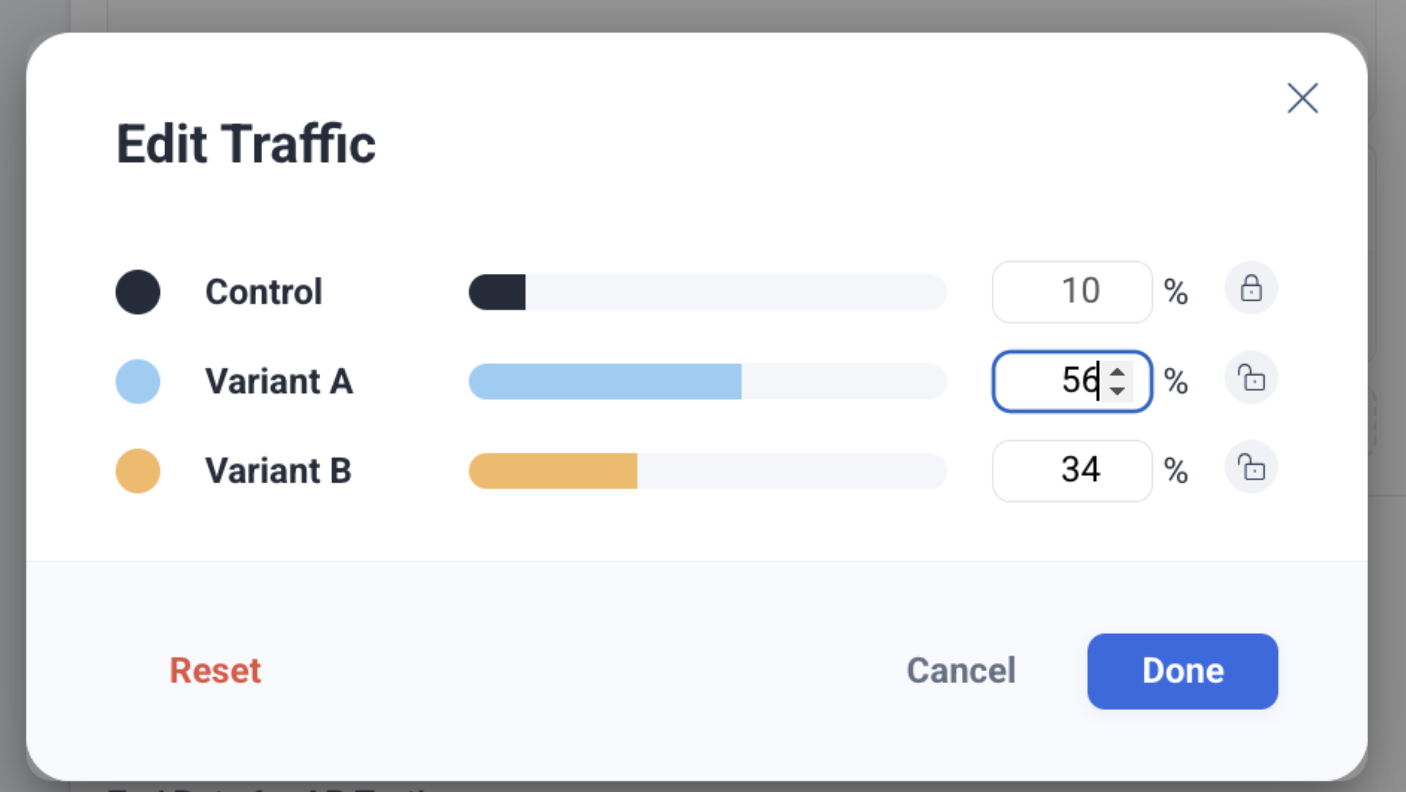

Edit Traffic

- Click the Pencil Icon to open the Edit Traffic modal.

- Adjust the percentage for each variant.

- Click the Lock button to save.

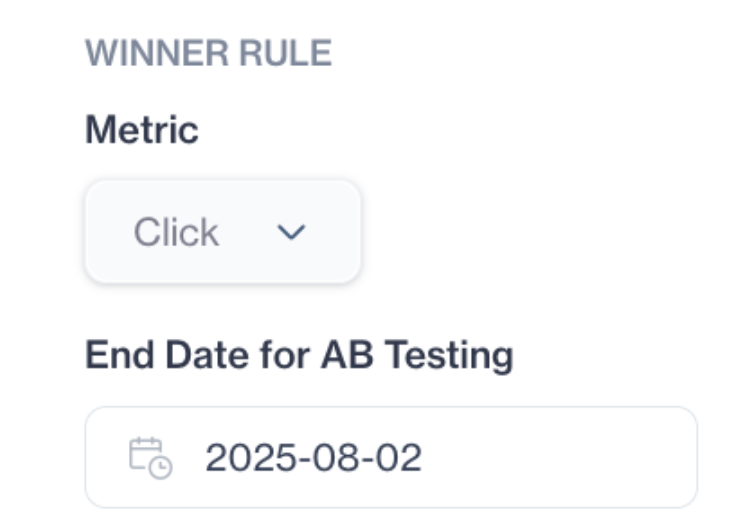
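How the platform assigns visitors to variants internally is not described here. As a rough illustration of what a custom split means in practice, the sketch below checks that the percentages add up to 100 and then maps a visitor to a variant with a stable hash, so the same visitor always lands in the same variant. The function names, the visitor ID format, and the use of SHA-256 are assumptions made for this example, not the product's implementation.

```python
import hashlib


def validate_split(split: dict[str, float]) -> None:
    """Raise ValueError unless the percentages are non-negative and total 100."""
    if any(pct < 0 for pct in split.values()):
        raise ValueError("percentages must be non-negative")
    if abs(sum(split.values()) - 100.0) > 1e-9:
        raise ValueError("percentages must add up to 100")


def assign_variant(visitor_id: str, split: dict[str, float]) -> str:
    """Map a visitor to a variant; the same visitor always gets the same variant."""
    validate_split(split)
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a point in [0, 100).
    point = int.from_bytes(digest[:8], "big") / 2**64 * 100.0
    cumulative = 0.0
    for name, pct in split.items():
        cumulative += pct
        if point < cumulative:
            return name
    return name  # guard against floating-point rounding at the upper edge


# Hypothetical custom split: 20% control, 40% each for two variants.
split = {"Control Group": 20, "Variant A": 40, "Variant B": 40}
print(assign_variant("visitor-123", split))
```

Hashing the visitor ID rather than drawing a random number on every visit keeps a returning visitor in the same variant, which is one common way to keep per-variant visitor counts meaningful.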

Winner Rule

- Metric: Currently, only click-based conversion is supported.

- Control Group: The control group consists of users who are not shown any content. It is used exclusively for benchmarking and is excluded from winner selection.

- Evaluation Criteria:

  - Each variant must reach at least 100 visitors and 25 conversions before evaluation.

  - A variant wins if it outperforms all others with ≥95% confidence.

  - If no winner emerges, testing continues, or you can manually select a winner.

- Auto-Close: Tests close automatically after 30 days if no winner is found.

Winner Logic

Statistical Model

The system uses a two-proportion Z-test to compare the click-through rate (CTR) of each variant against every other variant. A winner is declared only if one variant statistically outperforms all others.

The Z-test is a statistical method used to determine if the difference in performance (conversion rate) between two variants is significant or if it happened by chance. In the context of A/B testing, it helps decide whether one variant truly performs better than another with high confidence.

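Formula

$$
z = \frac{p_1 - p_2}{\sqrt{\hat{p}\,(1 - \hat{p})\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}}
$$

Where: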

- p₁ = Conversion rate of Variant A = clicks_A / users_A

- p₂ = Conversion rate of Variant B = clicks_B / users_B

- n₁, n₂ = Number of users in Variant A and B

- x₁, x₂ = Number of clicks in Variant A and B

- p̂ (p-hat) = Pooled conversion rate = (x₁ + x₂) / (n₁ + n₂)

These comparisons are repeated as data accumulates until a statistically significant winner is identified or the test closes.
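The pooled two-proportion Z-test described above can be sketched in a few lines of Python. The function names are illustrative. The conversion from z to a confidence value assumes a one-sided test (the probability that Variant A is truly better than Variant B), which this document does not specify, so treat that part as an assumption.

```python
import math


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> float:
    """Z statistic for clicks x1 out of n1 users versus x2 out of n2 users."""
    p1 = x1 / n1
    p2 = x2 / n2
    p_hat = (x1 + x2) / (n1 + n2)  # pooled conversion rate
    se = math.sqrt(p_hat * (1 - p_hat) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0  # no variation in the data, so no measurable difference
    return (p1 - p2) / se


def confidence(z: float) -> float:
    """One-sided confidence that variant 1 beats variant 2 (assumption)."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


# Made-up numbers: 60 clicks from 1,000 users versus 40 clicks from 1,000 users.
z = two_proportion_z(60, 1000, 40, 1000)
print(f"z = {z:.2f}, confidence = {confidence(z):.3f}")
```

With these made-up numbers, z is about 2.05 and the one-sided confidence is about 0.98, which would clear a 95% threshold.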

End Date for A/B Testing

You can define a specific end date for your A/B test:

- Use the calendar picker to select the End Date for A/B Testing.

- The test will automatically stop on this date if no winner has been declared earlier.

- If an end date is not manually set, the system will run the test for up to 30 days by default.

This allows you to control the duration of your test and ensure timely evaluation of results.
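The end-date rule can be expressed as a small helper. This is a sketch only: the function name is hypothetical, and capping a user-chosen end date at 30 days after the start is an assumption based on the stated 30-day maximum, not confirmed behavior.

```python
from datetime import date, timedelta
from typing import Optional

DEFAULT_MAX_DAYS = 30  # default and maximum test duration stated in this guide


def effective_end_date(start: date, end: Optional[date] = None) -> date:
    """Return the date on which the test stops if no winner is declared earlier."""
    latest = start + timedelta(days=DEFAULT_MAX_DAYS)
    if end is None:
        return latest  # no end date set: run for up to 30 days
    if end < start:
        raise ValueError("end date cannot be before the start date")
    # Assumption: an end date beyond the 30-day maximum is capped.
    return min(end, latest)


print(effective_end_date(date(2024, 1, 1)))                    # default window
print(effective_end_date(date(2024, 1, 1), date(2024, 1, 10)))  # explicit end date
```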

Reports

Viewing and Understanding A/B Test Results

How to Access Reports

- Go to Campaign > Targeting > On-Site.

- Locate your campaign in the listing view.

- Click on the Report icon next to the campaign name.

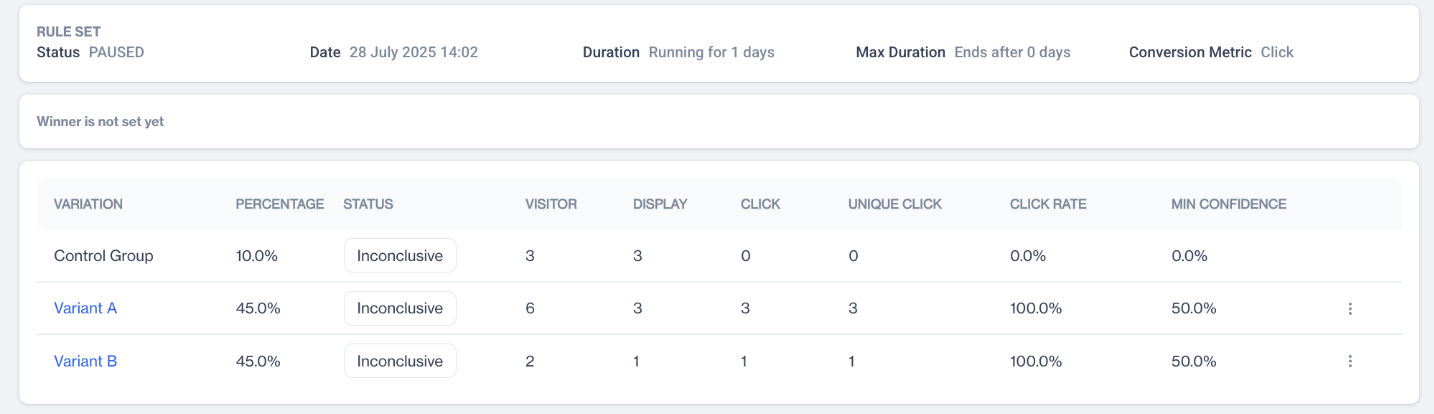

Report Screen Overview

On the report screen, you will see detailed test performance metrics and status indicators.

Report

Rule Set Information

- Status: Current state of the test (e.g., Running, Paused).

- Date: The date when the test started.

- Duration: How long the test has been running.

- Max Duration: Maximum time the test is allowed to run (auto-closes after 30 days).

- Conversion Metric: The metric being measured (currently Click).

- Winner Status: Shows if a winner has been selected automatically or manually.

Variant Performance Table

The Variant Performance Table provides a detailed breakdown of each variant's test results:

- Variation: The name of the variant being tested (e.g., Control Group, Variant A, Variant B).

- Percentage: The traffic allocation percentage assigned to the variant.

- Status: The current evaluation state of the variant (e.g., Still Collecting, Inconclusive, Statistically Significant).

- Visitors: Total number of unique visitors who qualified for and entered this variant.

- Display: Number of times the variant content was shown to visitors.

- Click: Total number of clicks generated by this variant.

- Unique Clicks: Number of distinct users who clicked on the variant content.

- Click Rate (CTR): Percentage of visitors who clicked on the variant.

- Min Confidence: The statistical confidence level achieved for this variant compared to others (≥95% is required for automatic winner selection).

This table helps you track engagement, traffic distribution, and statistical confidence for each variant during the test.

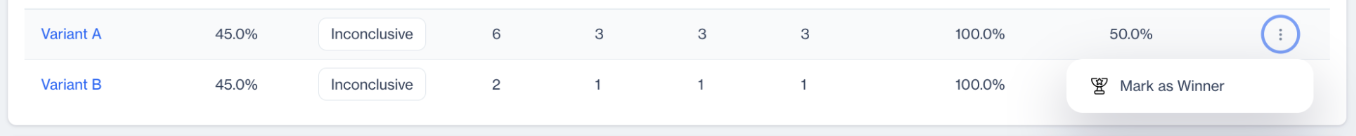

Each variant includes a "Mark as Winner" option for manual selection if desired.

Mark as Winner

Winner Selection Logic

- The system uses a Z-Test to compare click-through rates between variants.

- A variant must outperform all others with ≥95% confidence to be automatically declared the winner.

- The Control Group is excluded from winner evaluation and used for benchmarking only.

- If no variant meets the threshold, the test remains inconclusive.

Data Requirements

- Each variant must have at least 100 visitors and 25 conversions before analysis.

- Until this threshold is met, variants remain in “Still Collecting” status.
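Taken together, the selection logic and data requirements above can be sketched as follows. The `Variant` class and function names are hypothetical. Treating "Min Confidence" as the lowest pairwise confidence against the other variants, using a one-sided test, and applying the data thresholds only to non-control variants are assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional

MIN_VISITORS = 100
MIN_CONVERSIONS = 25
CONFIDENCE_THRESHOLD = 0.95


@dataclass
class Variant:
    name: str
    visitors: int
    conversions: int
    is_control: bool = False


def confidence_a_beats_b(a: Variant, b: Variant) -> float:
    """One-sided confidence from a pooled two-proportion Z-test."""
    pooled = (a.conversions + b.conversions) / (a.visitors + b.visitors)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.visitors + 1 / b.visitors))
    if se == 0:
        return 0.5  # identical, degenerate data: no evidence either way
    z = (a.conversions / a.visitors - b.conversions / b.visitors) / se
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def pick_winner(variants: list[Variant]) -> Optional[str]:
    """Return the winning variant's name, or None if there is no winner yet."""
    candidates = [v for v in variants if not v.is_control]  # control is excluded
    if len(candidates) < 2:
        return None  # nothing to compare
    if any(v.visitors < MIN_VISITORS or v.conversions < MIN_CONVERSIONS
           for v in candidates):
        return None  # still collecting data
    for v in candidates:
        min_conf = min(confidence_a_beats_b(v, o)
                       for o in candidates if o is not v)
        if min_conf >= CONFIDENCE_THRESHOLD:
            return v.name
    return None  # inconclusive so far


# Made-up counts for illustration.
test = [
    Variant("Control Group", 1000, 30, is_control=True),
    Variant("Variant A", 1000, 60),
    Variant("Variant B", 1000, 40),
]
print(pick_winner(test))
```

At most one variant can clear the threshold against every other variant, so returning the first match is sufficient.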

When a Winner Is Declared

- If one variant statistically beats all others:

  - It is automatically selected as the winner.

  - All traffic will be redirected to the winning variant.

Manual Selection

- If no automatic winner is found, you can manually select a winner by clicking "Mark as Winner".

- Once selected, the test is locked, and no further evaluation occurs.

Test Duration and Expiry

- Tests run for a maximum of 30 days.

- If no winner is determined within this period, the test is automatically closed as inconclusive.

Variant Statuses

You may see the following statuses for each variant:

- Still Collecting → Not enough data gathered.

- Statistically Significant → Variant has beaten all others with ≥95% confidence.

- Not Significant → Variant did not reach ≥95% confidence against the other variants.

- Inconclusive → No clear winner identified.

- Manual Winner → Winner selected manually by the user.
